This setup offers a great workaround for efficient performance.

Please check the new article about libcamera usage for motion, as this one might be outdated. Go check here. The new version might also work on Bullseye, but I have not tested it yet.

The Debian Bullseye release for the 64-bit Raspberry Pi OS includes libcamera, but the motion software designed for Raspberry camera modules isn’t fully compatible with the libcamera software stack. This issue is particularly pronounced with the new camera module version 3, which no longer supports the legacy camera software.

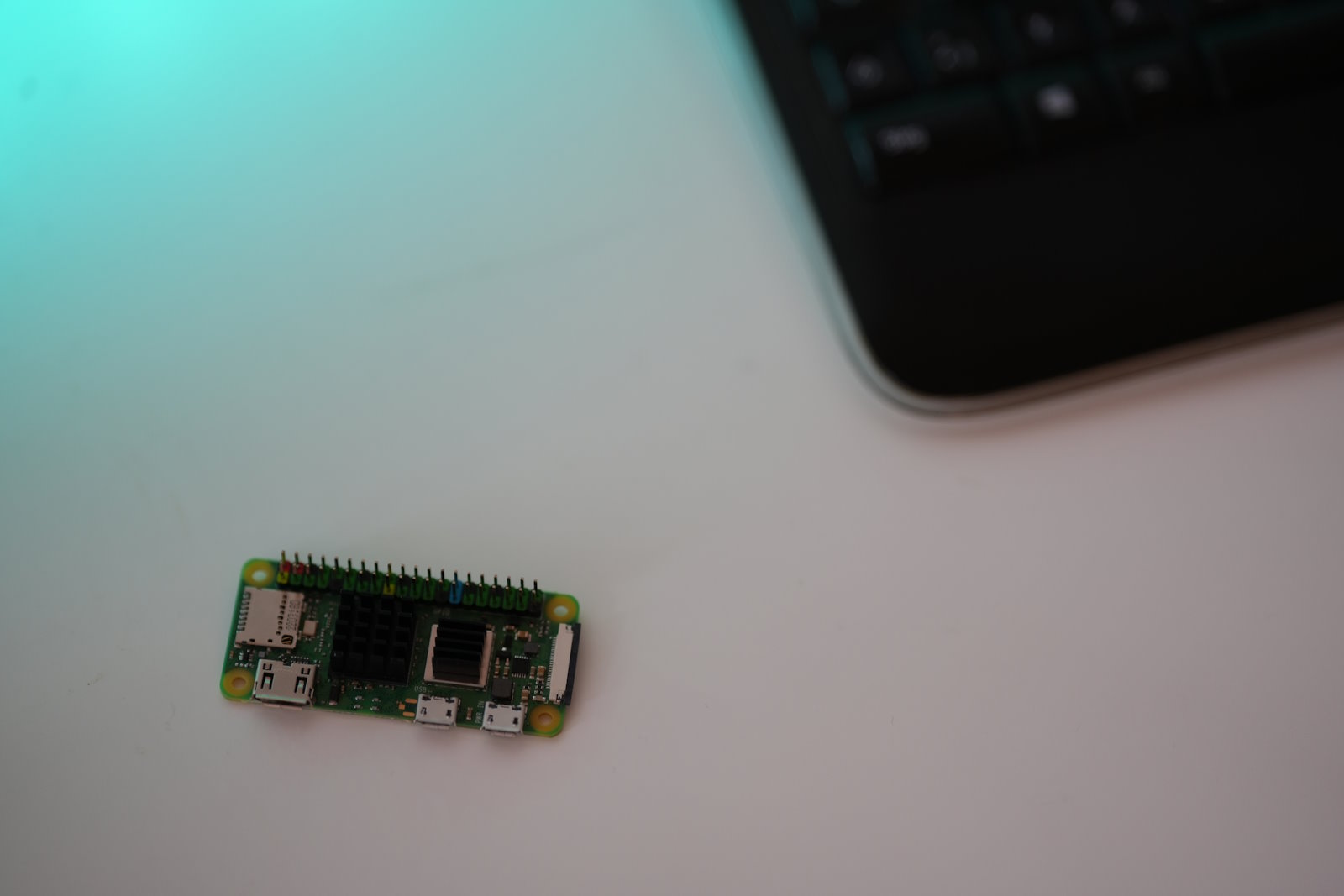

Although motion served reliably for many years, it fails to detect the camera in the new configuration. Fortunately, the whole path from the camera sensor through libcamera to motion and on to storage on your hard drive can be assembled from standard system tools, so building a custom solution is relatively straightforward. All you need is a Raspberry Pi, the Debian Bullseye 64-bit OS, the new camera module v3, the system’s ffmpeg, and the Nginx web server with the RTMP module installed.

TL;DR: I use libcamera-vid to get the camera’s direct h264 output, write it to a FIFO, and have ffmpeg repackage that FIFO with a direct stream copy (meaning no CPU-intensive transcoding!) into an FLV stream. Nginx/RTMP relays this stream, and motion records it via the netcam_url camera option, either on the local machine or on a remote one with motion installed.

With this approach, you can obtain a direct h264 stream from the camera in Full HD at 30 frames per second with high quality (the script below is set to 24). ffmpeg repackages this stream without any re-encoding (on neither the CPU nor the GPU) and delivers it to motion in a reliable streaming format. There are no incompatibilities, minimal resources are required, and both CPU and GPU remain cool, which avoids the libcamera problems with motion.

Here’s my initial script that captures the h264 stream directly from the camera and passes it to ffmpeg:

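    # Create the named pipe that connects libcamera-vid to ffmpeg
    mkfifo /home/motion/stream
    # Capture h264 from the camera and write it into the pipe (detached in a screen session)
    screen -dm libcamera-vid -t 0 --width 1920 --height 1080 --codec h264 -n --vflip --hflip --hdr 1 --profile=high --framerate 24 -o /home/motion/stream
    # Read the pipe and publish it as FLV over RTMP; -c:v copy means the video is not re-encoded
    screen -dm ffmpeg -y -i /home/motion/stream -s 1920x1080 -r 24 -c:v copy -b:v 1500k -maxrate 5000k -bufsize 10000k -g 60 -f flv rtmp://nginx.domain.or.ip/live/test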
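The FIFO handoff in the script can be tried without a camera. The following is a minimal sketch, not part of my setup: `printf` and `cat` stand in for libcamera-vid and ffmpeg, and `FIFO_PATH` and `ensure_fifo` are hypothetical names chosen for the demo. It also creates the pipe only if it is missing, so rerunning it does not fail on an existing FIFO.

```shell
#!/bin/sh
# Sketch: a writer and a reader meeting on a named pipe.
# FIFO_PATH is a hypothetical temporary location for this demo only.
FIFO_PATH="${TMPDIR:-/tmp}/stream-demo.$$"

ensure_fifo() {
    # -p is true only if the path exists and is a named pipe
    [ -p "$1" ] || mkfifo "$1"
}

ensure_fifo "$FIFO_PATH"
ensure_fifo "$FIFO_PATH"   # second call is a no-op instead of an error

# The writer runs in the background and blocks until a reader opens the pipe
# (this is the role libcamera-vid plays with -o <fifo>)
printf 'frame-data\n' > "$FIFO_PATH" &

# The reader drains the pipe (this is the role ffmpeg plays with -i <fifo>)
RECEIVED=$(cat "$FIFO_PATH")
wait
rm -f "$FIFO_PATH"
echo "$RECEIVED"
```

Nothing is stored on disk in between: the data only flows while both ends are open, which is why both programs have to be running at the same time.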

The camera is then configured in the /etc/motion/camera1.conf file like this:

    # /usr/etc/motion/camera2.conf
    #
    # This config file was generated by motion 4.5.0+git20221030-28de590

    ###########################################################
    # Configuration options specific to camera 2
    ############################################################

    # User defined name for the camera.
    camera_name PiCam

    # Numeric identifier for the camera.
    camera_id 1

    # The full URL of the network camera stream.
    #netcam_url http://yourcamera2ip:port/camera/specific/url
    netcam_url rtmp://IP-OR-DOMAIN-OF-NGINX-STREAM:1935/live/test
    netcam_userpass test:test

    # Image width in pixels.
    width 1920

    # Image height in pixels.
    height 1080

    # Text to be overlayed in the lower left corner of images
    text_left PiCam

    # Text to be overlayed in the lower right corner of images.
    text_right PiCam\n%Y-%m-%d\n%T-%q
    text_scale 2

    # File name(without extension) for movies relative to target directory
    movie_filename PiCam_%t-%v-%Y%m%d%H%M%S

The Nginx server needs the RTMP module installed to act as the RTMP streaming server:

Read here how to.

The relevant part of my nginx.conf looks like this:

    rtmp {
        server {
            listen 1935;
            timeout 60s;
            notify_method post;
            chunk_size 8192;

            application live {
                live on;
                record off;
            }
        }
    }
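
The same URL has to appear in two places: as the ffmpeg output target and as motion's netcam_url. Its path is made of the nginx application name (`live`) followed by a stream name picked by the publisher (`test` in my script). As a small sketch, assuming a hypothetical helper `rtmp_url` and a placeholder host name, the URL can be built once and reused:

```shell
#!/bin/sh
# Sketch: build the RTMP URL from its parts so publisher and consumer agree.
# The function name and the example host are hypothetical.
rtmp_url() {
    # $1 host, $2 application (the nginx "application" block name),
    # $3 stream name, $4 optional port (defaults to the "listen 1935" value)
    printf 'rtmp://%s:%s/%s/%s\n' "$1" "${4:-1935}" "$2" "$3"
}

URL=$(rtmp_url nginx.example.org live test)
echo "ffmpeg target:     $URL"
echo "motion netcam_url: $URL"
```

If motion shows no picture, comparing these two values is a cheap first check, since a typo in the application or stream name fails silently on the consumer side.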

Disadvantages of this method:

- You have to start several programs with separate scripts (libcamera, ffmpeg, nginx, and motion)

- You need a stable network for the video stream(s) if you run it on separate machines

- Finding setup or stream errors might be more complicated

- Due to the RTMP streaming, a few seconds of delay can occur
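
Because several programs are involved, a quick check that each one is installed can save some searching. This is only a sketch under assumptions: `missing_tools` is a hypothetical helper, and the tool list reflects the programs named in this article. Note that some daemons (nginx on Debian, for example) are installed in /usr/sbin, which is not always on a regular user's PATH.

```shell
#!/bin/sh
# Sketch: report which tools of the chain are not found on PATH.
missing_tools() {
    MISSING=""
    for tool in "$@"; do
        command -v "$tool" >/dev/null 2>&1 || MISSING="$MISSING $tool"
    done
    # Strip the leading space and print the result (empty if all were found)
    printf '%s\n' "${MISSING# }"
}

RESULT=$(missing_tools libcamera-vid ffmpeg nginx motion screen)
if [ -n "$RESULT" ]; then
    echo "not found on PATH: $RESULT"
else
    echo "all tools found"
fi
```

Once everything is installed, pointing `ffprobe` at the RTMP URL is one way to see whether video is actually arriving at the Nginx server.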

Advantages of this workaround:

- This method does not use a lot of resources

- Only standard system software is used

- The stream(s) work reliably

- No incompatibilities with motion software

- You can easily record more than one stream or camera with motion. Tested with 4 in parallel.

- The system hardware and camera stay cool and run stably 24/7

- The motion or nginx software can run on a local or any remote machine to split workloads

- I also mix in other camera types like a Mevo and some USB cameras

- You can also tune into the stream without motion, via VLC for example
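
For several cameras, each publisher needs its own stream name and motion needs one camera file per stream. The following sketch generates such files from the options shown earlier. The stream names `cam1` to `cam4`, the variable names, and the temporary output directory are assumptions for illustration; the files would still have to be copied to your motion configuration directory and referenced from the main motion.conf.

```shell
#!/bin/sh
# Sketch: write one motion camera file per RTMP stream name.
# CONF_DIR, RTMP_BASE and the stream names are hypothetical placeholders.
CONF_DIR=$(mktemp -d)
RTMP_BASE="rtmp://IP-OR-DOMAIN-OF-NGINX-STREAM:1935/live"

ID=1
for NAME in cam1 cam2 cam3 cam4; do
    # Each file gets a unique camera_id and its own stream URL
    cat > "$CONF_DIR/camera$ID.conf" <<EOF
camera_name $NAME
camera_id $ID
netcam_url $RTMP_BASE/$NAME
width 1920
height 1080
EOF
    ID=$((ID + 1))
done

ls "$CONF_DIR"
```

On the publishing side, the only change per camera is the last path segment of the ffmpeg target, for example `/live/cam2` instead of `/live/test`.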

The new Raspberry camera module v3 noticeably improves quality in low-light conditions, and the autofocus keeps the image sharp. With the above workaround, I did not have to set any boot.config settings and did not change anything in raspi-config. Everything works straight out of the box.